2023 was a busy year for the development of Broadcast Bridge, run by our sister company Everycast Labs. We completely rewrote the desktop app and released a host of new features.

Everycast Labs’ aim is to make Broadcast Bridge as flexible as possible for video professionals, and this includes supporting several ways to input media streams.

We’ve built support in their cloud media server for the two main protocols for secure, low-latency media streaming: WHIP and SRT. You can read about what WHIP is and the details of their WHIP input feature in the Everycast Labs blog.

Today, I’d like to talk about what SRT is and how we built support for it in the Broadcast Bridge media server.

What is SRT?

SRT stands for Secure Reliable Transport and is an open source protocol for the transmission of media and data across unreliable networks, namely, the Internet. The protocol was developed by Haivision and the SRT Alliance now includes hundreds of companies.

SRT uses UDP as the transport layer, offers a latency buffer for packet loss recovery and supports encryption. Additionally, SRT is codec-agnostic, so it offers great flexibility for video professionals wanting to transfer their own media.

SRT transmission options can be set by adding parameters to the endpoint URL, for example:

srt://192.168.0.5:5000?mode=caller&streamid=12345&passphrase=secret

This connects to the local network address 192.168.0.5 on port 5000 in caller mode, with a stream ID of “12345” and a passphrase of “secret”.

More info about options can be found in the SRT documentation.
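To make the structure of such a URL concrete, here is a minimal sketch in Python that splits the example above into its address and options. The `parse_srt_url` helper is our own illustration and not part of any SRT library. It only relies on the standard library's URL parser.

```python
from urllib.parse import urlsplit, parse_qs


def parse_srt_url(url: str) -> dict:
    """Split an srt:// URL into host, port and a flat dict of options.

    Hypothetical helper for illustration only; not part of any SRT library.
    """
    parts = urlsplit(url)
    if parts.scheme != "srt":
        raise ValueError(f"not an SRT URL: {url!r}")
    # parse_qs returns a list of values per key; keep the last one for each option.
    options = {key: values[-1] for key, values in parse_qs(parts.query).items()}
    return {"host": parts.hostname, "port": parts.port, "options": options}


info = parse_srt_url(
    "srt://192.168.0.5:5000?mode=caller&streamid=12345&passphrase=secret"
)
print(info["host"], info["port"], info["options"])
```

Note that every option arrives as a string, so a numeric option would need converting before use.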

One commonly-used option is the latency buffer, which can range from a few milliseconds, to prioritise low latency, up to a few seconds, to maximise packet loss recovery.
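As a rough sketch of how this option might be set, the Python snippet below adds or replaces a `latency` parameter on an existing SRT URL. It assumes the receiving tool reads a `latency` query parameter expressed in milliseconds. Parameter names and units can differ between tools, so check the documentation of the one you use. The `with_latency` helper is hypothetical.

```python
from urllib.parse import urlsplit, parse_qsl, urlencode


def with_latency(url: str, latency_ms: int) -> str:
    """Return the SRT URL with its latency option set to latency_ms.

    Assumption: the tool consuming the URL expects `latency` in milliseconds.
    """
    if latency_ms < 0:
        raise ValueError("latency must not be negative")
    parts = urlsplit(url)
    # Keep every existing option except a previous latency value.
    options = [(k, v) for k, v in parse_qsl(parts.query) if k != "latency"]
    options.append(("latency", str(latency_ms)))
    return parts._replace(query=urlencode(options)).geturl()


low_latency = with_latency("srt://192.168.0.5:5000?mode=caller", 120)
resilient = with_latency(low_latency, 2000)
```

The first call favours low latency on a good network, while the second trades two seconds of delay for more time to recover lost packets.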

Why use SRT?

Low latency, resilience to poor network conditions and flexibility are driving widespread adoption of SRT streaming among video producers and AV companies, from small teams to major players like Fox News and the NFL, as seen in the video on the SRT About page. Its main uses range from video contribution from remote sites to broadcast return feeds.

Traditionally, resilient, flexible, low-latency feeds would be achieved with satellite transmission or a private fibre link. While these methods are much less likely to suffer data loss than the public Internet, they are also very expensive. SRT (like WebRTC), on the other hand, can get almost the same end result for a much lower cost, so it is a great choice for smaller productions with a lower budget, as well as a good fallback for those who are using satellite or fibre.

How Broadcast Bridge supports SRT

It all starts with Donut, which is a Pion-based open source project that takes an SRT stream and converts it to WebRTC to display it in a browser.

We made a fork to add WHIP support, so that the WebRTC stream can be easily sent to a compatible destination; in our case, the Broadcast Bridge media server. In this way, when a Broadcast Bridge SRT endpoint receives a request, Donut immediately converts the stream to WebRTC and sends it to the correct WHIP endpoint in the media server. As both the Donut and media server instances run on the same server, there is no quality degradation in this step.
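For readers unfamiliar with WHIP, the hand-off at the end of that chain is an ordinary HTTP request: the client POSTs an SDP offer with a bearer token, and the server answers with `201 Created` and an SDP answer. The Python sketch below only builds such a request, without sending it. It is not Donut's code (Donut is written in Go), and the endpoint URL, token and SDP body are placeholders we made up for illustration.

```python
from urllib.request import Request


def build_whip_request(endpoint: str, bearer_token: str, sdp_offer: str) -> Request:
    """Build (but do not send) the HTTP POST that starts a WHIP session."""
    return Request(
        endpoint,
        data=sdp_offer.encode("utf-8"),
        method="POST",
        headers={
            # WHIP carries the WebRTC offer as an SDP document.
            "Content-Type": "application/sdp",
            # The endpoint authenticates the publisher with a bearer token.
            "Authorization": f"Bearer {bearer_token}",
        },
    )


# Placeholder values: a real offer comes from the WebRTC stack, not a literal.
request = build_whip_request(
    "https://media.example.com/whip/room-1", "example-token", "v=0\r\n"
)
```

In a real client, the SDP answer in the response is then applied to the peer connection, and the media itself flows over WebRTC rather than HTTP.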

What about codec support? While SRT is technically codec-agnostic, it is most commonly used to stream H264 video, which means there is no need to transcode for WebRTC. Currently, Broadcast Bridge supports H264 for video and Opus for audio with SRT, but Everycast Labs are looking at adding support for more codecs, and possibly even transcoding ability, if there’s a demand for it.

There’s also an opportunity for user-specified latency with SRT, which Broadcast Bridge may incorporate in future. Currently SRT latency in Broadcast Bridge is fixed at 300ms.

The repo is public, so feel free to have a look at how we added WHIP to Donut, and keep up to date with the project.

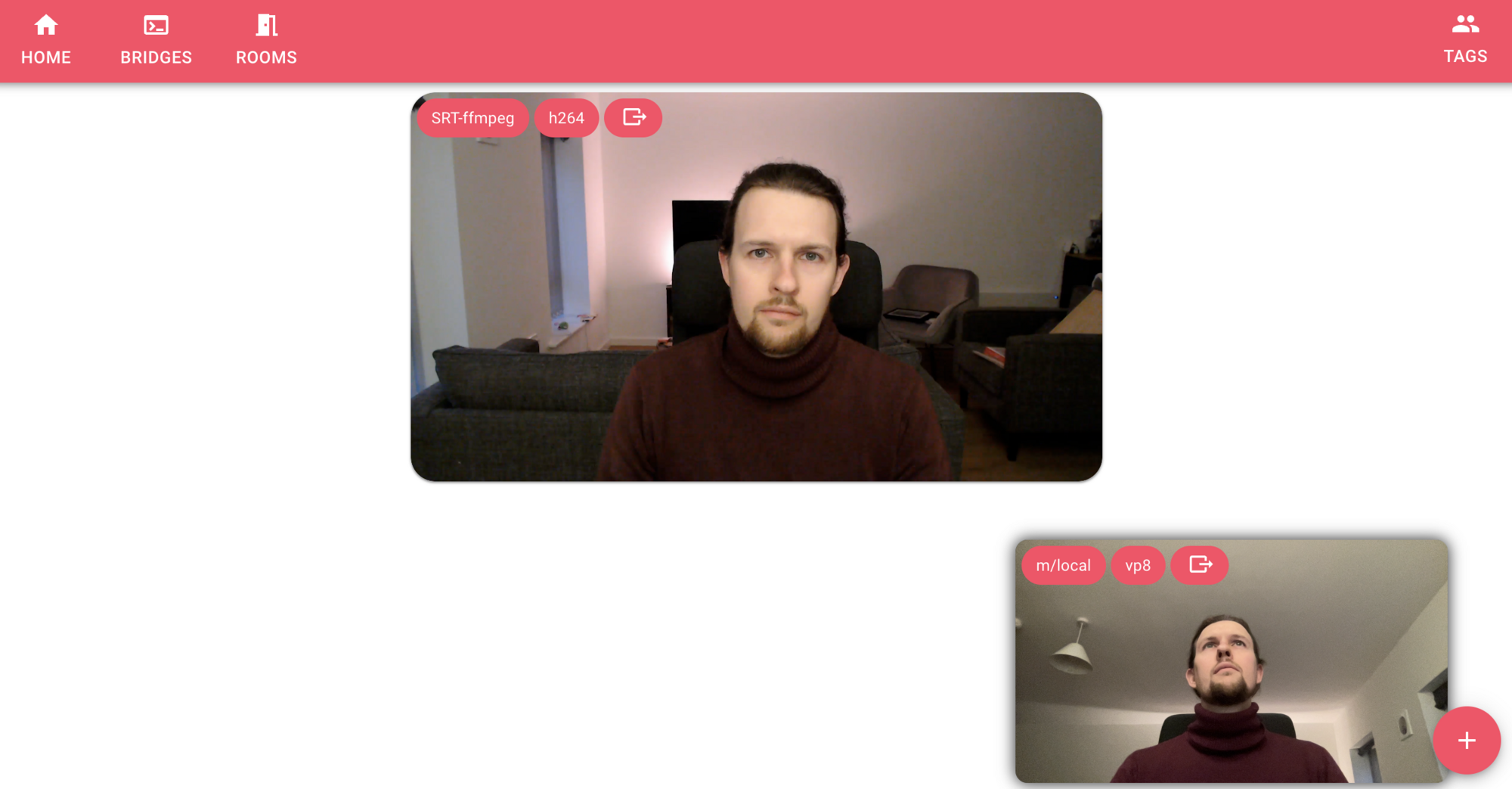

What it looks like for the user

Destination

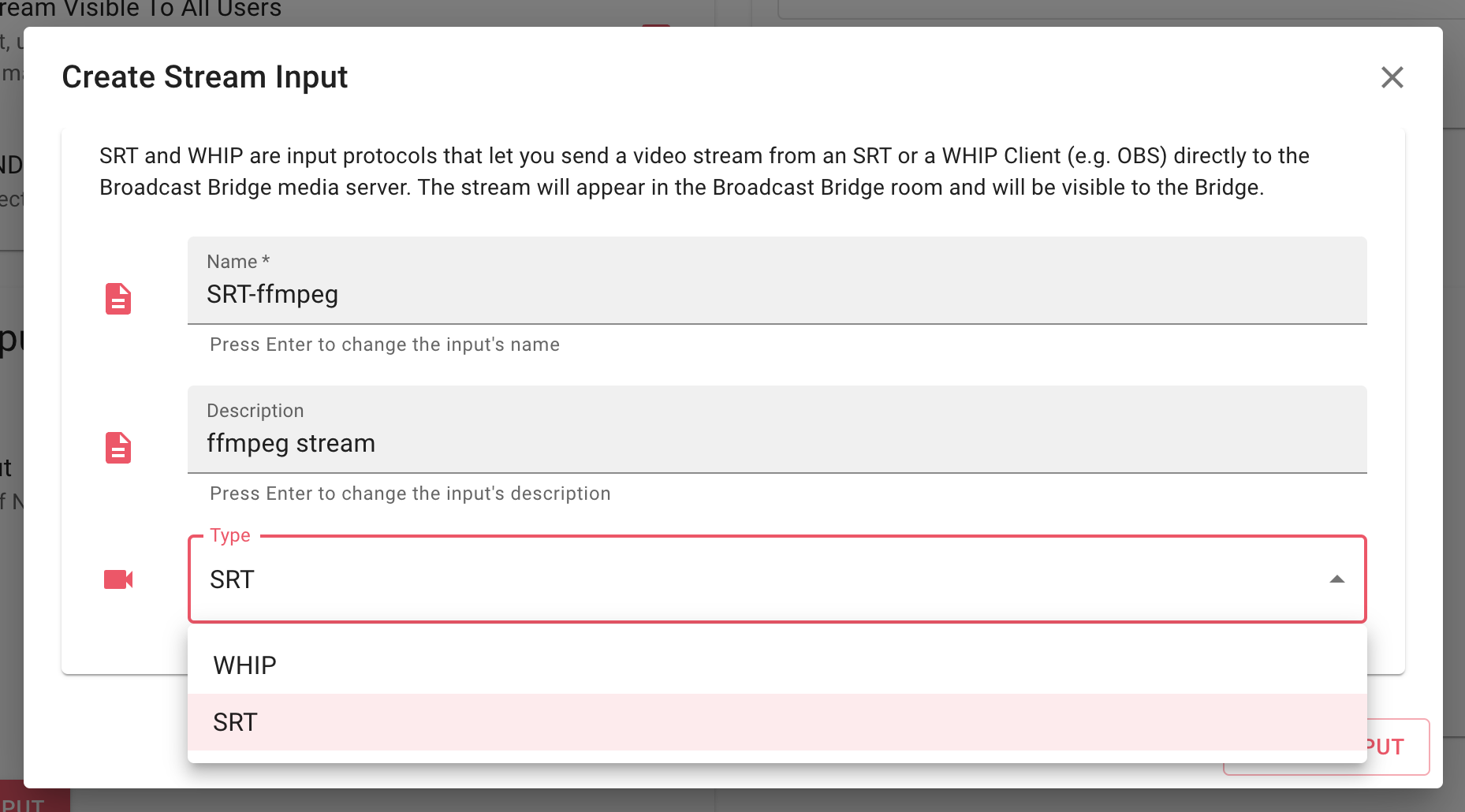

Let’s take a look at the user journey to start streaming a video to the Broadcast Bridge media server using SRT.

To create an endpoint, the user navigates to a Broadcast Bridge Room’s settings and adds a stream input from a dialog. In the background, this generates the URL needed to point the stream to the media server. The URL is automatically assigned the streamid and passphrase options so it can be accepted by the Broadcast Bridge server, and it looks something like:

srt://srt.uk.broadcastbridge.dev:5000?streamid=<streamID>&passphrase=<passphrase>

The stream ID identifies a media stream in a user’s room, while the passphrase contains the bearer token needed to authenticate with the media server.
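As an illustration of how such a URL is put together, here is a minimal Python sketch. It is not the Broadcast Bridge implementation: the `build_input_url` helper and the example values are hypothetical, and only the host, port and option names come from the URL shown above.

```python
from urllib.parse import urlencode


def build_input_url(
    stream_id: str,
    passphrase: str,
    host: str = "srt.uk.broadcastbridge.dev",
    port: int = 5000,
) -> str:
    """Assemble an SRT input URL from a stream ID and passphrase.

    Hypothetical helper; the real URL is generated by Broadcast Bridge.
    """
    # urlencode percent-encodes any characters that are unsafe in a query string.
    query = urlencode({"streamid": stream_id, "passphrase": passphrase})
    return f"srt://{host}:{port}?{query}"


# Made-up example values standing in for <streamID> and <passphrase>.
url = build_input_url("abc123", "tok-en_1")
```

Encoding the values matters because a stream ID or token containing characters such as `&` or `=` would otherwise be misread as extra options.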